Is Your Creative Analysis Tool Actually Reading Your Ads? An Investigation Into What Your Attention Prediction Software Isn't Telling You

In This Article You Will Learn

The difference between algorithmic prediction and deep learning AI

Why semantic text understanding changes everything

How multimodal analysis captures the full creative message

Why different formats need different success metrics

The real business cost of approximation

What 98% accuracy means for your campaigns

When your CMO asks why the €500K campaign underperformed, you pull up your attention analytics dashboard. The heatmap looked good. The attention scores were strong. The algorithm said it would work.

But here's the question no one asks in the demo: Can your tool actually read?

Not just detect text. Not just identify that there are words on the screen. But truly understand what those words mean, and how that meaning changes everything about whether your creative will convert.

If the answer makes you uncomfortable, keep reading.

The AI-Washing Problem: When Algorithms Pretend to Be Intelligence

There's a credibility crisis in marketing technology, and it starts with a single word: AI.

Every vendor claims it. Few can prove it. And for Marketing Directors allocating seven-figure budgets, the difference between a rule-based algorithm and actual deep learning AI isn't semantic. It's the difference between approximation and precision.

Let's be specific. Dragonfly AI describes its technology as a "biological algorithm" based on neuroscience research (their wording, from their science page). It's an impressive piece of engineering: a rule-based system that attempts to model visual attention patterns.

But it's not machine learning. It's not deep learning. It's not AI in the way that term is understood in 2025.

This matters because algorithms follow predetermined rules. They're built on assumptions. They can't learn, from millions of real viewing sessions, how human attention actually behaves in the wild across demographics, cultures, devices, and contexts. They approximate. They generalize. And in marketing, generalization is just expensive guessing.

Brainsuite uses MIT-validated deep learning models trained on massive datasets of real human attention behavior. Not neuroscience theories translated into code. Not biological modeling. Real AI that learns patterns from reality, not from rules written by engineers making educated guesses about how humans look at things.

The result? 98% accuracy validated by academic research.

That's not a marketing claim. That's peer-reviewed science.

The Accuracy Gap: Why Every Percentage Point Separates a Successful Campaign from a Failed One

Let's do the business math that keeps CMOs up at night.

If your attention prediction tool relies on algorithmic approximation rather than deep learning precision, you're building strategy on guesswork. Every prediction that misses the mark isn't just wrong. It's a systemic risk to your budget.

At 98% accuracy, you're wrong twice in a hundred.

Now apply that to decisions. You're testing five creative concepts for a product launch. Budget: €500K in media spend. Your algorithmic tool confidently predicts Concept C will drive the highest attention and engagement. You commit. You launch. And three weeks into the campaign, you're underperforming benchmarks by 40% because what the algorithm thought would grab attention and what actually grabs attention live in different universes.

With a 98%-accurate tool, that €500K disaster becomes a €500K success, because you backed the creative that actually performs, not the one that fits the algorithm's assumptions.
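The "wrong twice in a hundred" arithmetic can be made concrete in a few lines of Python. This is a minimal sketch that rests on two assumptions. First, "accuracy" is read as the probability that any single prediction is correct. Second, decisions are treated as independent. The 90% and 80% figures are hypothetical comparison points and are not published numbers for any specific tool.

```python
# Sketch only. ASSUMPTIONS: "accuracy" = probability that a single prediction
# is correct, and decisions are independent of each other.
# The 0.90 and 0.80 values are hypothetical comparison points.

def expected_wrong_calls(accuracy: float, decisions: int) -> float:
    """Expected number of incorrect predictions across `decisions` calls."""
    return decisions * (1.0 - accuracy)

def prob_at_least_one_wrong(accuracy: float, decisions: int) -> float:
    """P(at least one miss) = 1 - accuracy ** decisions, under independence."""
    return 1.0 - accuracy ** decisions

for acc in (0.98, 0.90, 0.80):
    print(
        f"accuracy={acc:.2f}: "
        f"{expected_wrong_calls(acc, 100):.0f} wrong calls per 100 decisions, "
        f"P(at least one wrong in 5 decisions)={prob_at_least_one_wrong(acc, 5):.1%}"
    )
```

The expected number of misses grows linearly as accuracy drops. The chance of at least one miss compounds with every additional decision, which is why small accuracy differences add up over a year of launches.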

Here's the uncomfortable truth: Every time you make a creative decision based on approximation instead of precision, you're playing Russian roulette with your budget. And in a world where CFOs are demanding ROI transparency on every euro spent, approximation doesn't just underperform. It ends careers.

The accuracy gap between algorithmic approximation and MIT-validated deep learning isn't a technical detail. It's the margin between hitting your KPIs and explaining to the board why you didn't.

"I had a look at one of Brainsuite's competitors as well. When we gave them different pictures of POS material that we have used in the market, it was giving us the wrong suggestions, meaning the weaker solution would be the preferred one." — Moritz Patzke, Director Digital Transformation & Data Strategy at Lavazza

The Text Blindness That Costs Half Your Message

Now we get to the problem that should terrify any marketer running copy-driven campaigns.

Most attention prediction tools can detect text. They see that there are words in a specific location. They know a headline exists. But they can't understand what those words mean, and that semantic blindness costs you half your message.

Let me show you what this looks like in practice.

Scenario 1: The Headline That Changes Everything

You're testing two versions of a display ad for a travel insurance brand. Identical visual: family on beach at sunset. Same layout. Same logo placement. The only difference is the headline.

Version A: "Travel Insurance Starting at €4.99/Month"

Version B: "What Happens When Your Flight Gets Canceled Abroad?"

A tool that can't understand text meaning will give you nearly identical attention predictions. After all, the location of the headline is the same. The visual weight is the same. The algorithm sees: big text at top, body copy below, CTA button bottom right. Pattern match complete.

But any human marketer knows these headlines trigger completely different psychological responses. Version A is informational and price-focused. Version B taps into anxiety and emotional urgency. One appeals to planners. One appeals to worriers. They'll perform radically differently depending on your audience, but your algorithm can't tell the difference because it can't read.

Brainsuite processes semantic meaning. It understands that "What happens when..." is a fear-based pattern trigger. It knows that urgency language changes attention behavior. It doesn't just see text. It comprehends how that text alters the viewer's cognitive processing of the entire ad.

That's not a feature. That's the difference between prediction and guessing.

Some of these vendors also claim to predict eye gaze from their imprecise heatmaps. They rank the hotspots and present that ranking as the path the eye follows across the asset. As the example above shows, this 'hack' is not valid: starting at the 'z', moving to the 'g', jumping to the 'P', and returning to the 'z' is not how humans process this asset. Ranking hotspots is NOT predicting eye gaze.

Scenario 2: The TikTok Ad That Lives in Three Dimensions

Here's where text blindness becomes financially catastrophic: short-form video.

You're launching a new fintech app aimed at Gen Z. Your creative team produces a 15-second TikTok ad. The video shows a young woman stressed about bills, then relieved as she uses your app. Simple story. Strong visual narrative.

But that's only one layer of the message.

There's text overlay: "When rent is due but payday is Friday 💀"

There's a voiceover: "Stop stressing. Get paid early with FlexPay."

There's background music: an upbeat electronic track that shifts from tension to resolution.

Now here's the test: Which element drives conversion? The visual story? The relatable text overlay that makes Gen Z users tag their friends? The voiceover that delivers your value proposition? The audio cue that signals emotional release?

If your attention tool can't process semantic text meaning, can't analyze voiceover content, and can't evaluate audio emotional cues, it's analyzing only one of your creative's four layers. It predicts attention from the visual story alone and ignores the text, spoken word, and audio that Gen Z viewers actually process simultaneously.

You're making a six-figure media buy based on one layer of the message.

Brainsuite is multimodal. It processes:

Written text (semantic meaning, emotional valence, urgency patterns)

Spoken text (voiceover content, tone, pacing)

Visual content (scene-by-scene video analysis, not just static frames)

Audio cues (music, sound effects, emotional signaling)

Because that's how humans actually consume content in 2025. Not in isolated channels. Not one sense at a time. All together, all at once, with meaning that emerges from the combination of elements.

If your current tool is only analyzing visuals, you're not predicting attention. You're predicting what someone with the sound off and no ability to read might notice. And that's not your customer.

Scenario 3: The Packaging That Talks Past the Shopper

Walk down any grocery aisle and you'll see the text hierarchy problem in three dimensions.

Your brand is launching a premium organic pasta. The package design includes:

Brand logo (top left, moderate size)

Product name "Ancient Grain Fusilli" (center, large)

Benefit callout "12g Protein Per Serving" (right side, bold)

Sustainability message "100% Regenerative Farming" (bottom, smaller but in green)

A tool that can't understand text meaning treats all of these as equivalent visual elements. It might tell you the "Ancient Grain Fusilli" text gets the most attention because it's largest and centered. Technically accurate. Strategically useless.

Because here's what actually happens in-store: A health-conscious millennial scans the aisle, sees "12g Protein" and stops. That benefit callout is the conversion trigger, not the product name, not the logo. Meanwhile, a sustainability-focused Gen Z shopper sees "100% Regenerative Farming" and that's their decision point. The same package, the same text elements, but the meaning of specific words drives completely different attention and purchase behavior.

Brainsuite doesn't just see text at different locations. It understands that "12g Protein" is a rational benefit trigger, that "Regenerative Farming" is a values-based trust signal, that "Ancient Grain" is a premium quality indicator. It predicts not just where people look, but why they look, and that "why" is embedded in semantic understanding.

When you're presenting packaging concepts to retail buyers or optimizing shelf placement, do you want a tool that tells you which text is biggest? Or one that tells you which text drives purchase intent for your target demographic?

The answer determines whether you win the shelf space or lose it to a competitor whose tool can actually read.

One-Size-Fits-All Is One Size Too Simple

Here's a test of your current attention prediction tool: Does it give you different KPIs for a TikTok ad, a highway billboard, and retail packaging?

If the answer is no (if it's using the same "attention score" or "engagement prediction" across all formats), you're using a tool built for convenience, not accuracy.

Because success looks completely different across channels:

A 3-second TikTok hook needs to stop the scroll within 0.8 seconds. The KPI isn't total attention. It's immediate visual disruption and fast emotional payoff. If viewers don't feel something in three seconds, they're gone. Your attention tool should be measuring hook strength, scroll-stopping power, and emotional escalation speed.

A highway billboard seen at 110 km/h has roughly 2 to 6 seconds of viewability. The KPI is instant brand recall and single-message clarity. Complex layouts fail. Multiple CTAs fail. Your tool should be optimizing for glanceability, not engagement depth.

Retail packaging on a crowded shelf competes with 47 other products in a 4-second scan. The KPI is differentiation and benefit communication speed. Can a shopper identify your category, understand your unique value, and locate your product again after looking away? That's not the same as "attention." That's shelf conversion architecture.
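The time windows quoted for billboards and shelves become more tangible when they are converted into distance and per-product time. The following is a minimal Python sketch. Its inputs come from the paragraphs above: 110 km/h, 2 to 6 seconds of billboard viewability, and a 4-second scan across your pack plus 47 others. It assumes that shelf attention is split evenly across products. That is a simplification, because real shoppers do not divide attention evenly. The function names are illustrative only.

```python
# Sketch only: converts the channel time windows quoted in the text into
# concrete numbers. ASSUMPTION: shelf scan time is split evenly across
# products, which real shoppers do not actually do.

KMH_TO_MS = 1000 / 3600  # 1 km/h expressed in metres per second

def billboard_view_distance_m(speed_kmh: float, seconds: float) -> float:
    """Metres of road a driver covers while the billboard is viewable."""
    return speed_kmh * KMH_TO_MS * seconds

def avg_shelf_time_per_product_s(scan_seconds: float, competitors: int) -> float:
    """Average scan time per product if attention were split evenly."""
    return scan_seconds / (competitors + 1)  # +1 for your own product

for t in (2, 6):
    print(f"{t} s at 110 km/h ~ {billboard_view_distance_m(110, t):.0f} m of road")

per_pack_ms = avg_shelf_time_per_product_s(4, 47) * 1000
print(f"4 s across 48 packs ~ {per_pack_ms:.0f} ms per pack on average")
```

Even under these generous assumptions, a billboard message has to land within a stretch of road of roughly a football pitch or two. A pack gets well under a tenth of a second of average scan time, which is why glanceability and benefit-communication speed are the metrics that matter for these formats.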

Generic attention scores collapse all of this nuance into a single number. They tell you that your creative "scores 78" without telling you whether that's good enough to stop a scroll, survive a highway glance, or win a shelf battle.

Brainsuite delivers asset-specific and channel-specific KPIs because there is no such thing as universal creative success. A brilliant billboard is a terrible TikTok ad. A scroll-stopping social post is an illegible highway disaster. And a one-size-fits-all prediction tool is a budget-wasting approximation machine.

When you're A/B testing five packaging concepts for a retail launch, do you want a tool that says "Concept B scores highest" or one that says "Concept B has 34% higher benefit message comprehension speed and 28% better brand differentiation in 4-second shelf scans among your core 25-44 demographic"?

One of those insights helps you win. The other helps you feel confident while you lose.

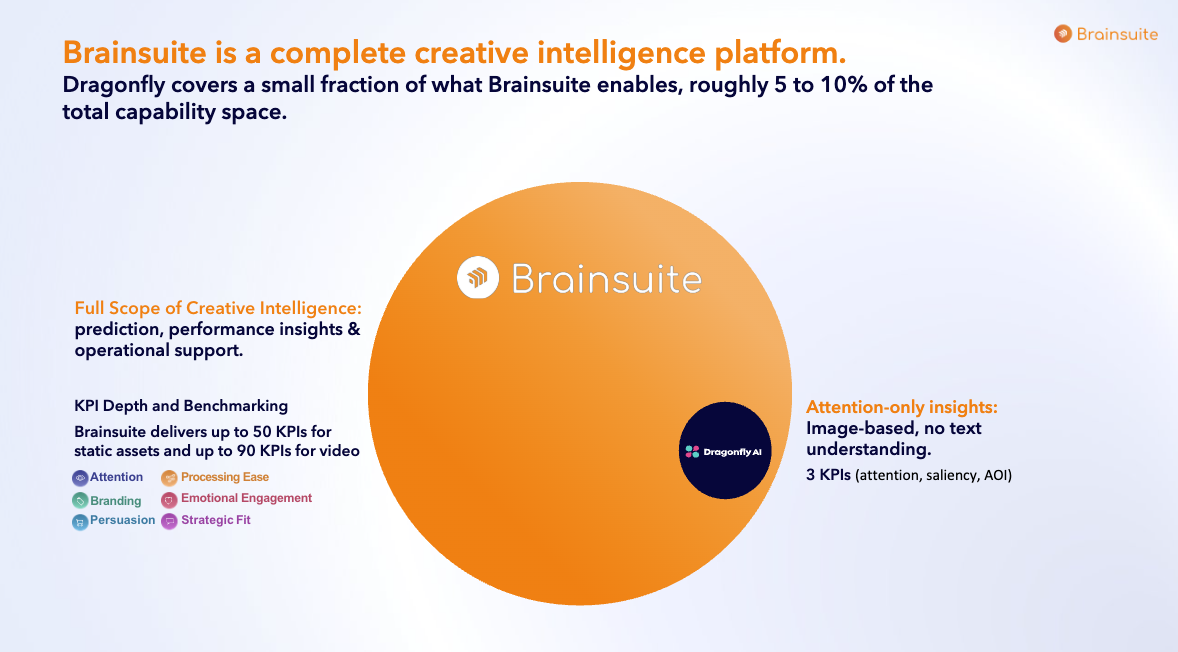

Brainsuite is a complete creative intelligence platform. Dragonfly covers a small fraction of what Brainsuite enables, roughly 5 to 10% of the total capability space.

The Real Cost of Approximation: A €500K Case Study

Let's make this concrete with a scenario that plays out in marketing departments every quarter.

You're the Marketing Director for a challenger brand in consumer electronics. Q4 launch: premium wireless earbuds targeting fitness enthusiasts. Budget: €500K across paid social, display, and retail.

Your creative team delivers five concepts. You need to pick one and commit. This is where your attention prediction tool either saves your quarter or ruins it.

Concept A features a runner mid-stride, earbuds visible, headline: "Never Lose Your Rhythm." Strong visual, aspirational messaging.

Concept B shows a close-up of the earbuds with water droplets, headline: "Sweat-Proof. Rain-Proof. Unstoppable." Product-focused, benefit-driven.

Your current tool (let's say it's using algorithmic prediction) analyzes both and scores Concept A highest. The reasoning: the human figure creates a strong focal point, the action shot implies a use case, and the composition follows proven attention patterns. It predicts 82% engagement likelihood. You feel good about the data. You commit €500K to Concept A.

Three weeks into the campaign, you're underperforming. Click-through rates are 35% below forecast. Conversion rates are anemic. You pull the campaign, run emergency creative testing, and discover the problem: your audience doesn't want aspiration. They want proof. They're not buying based on how they want to feel; they're buying based on whether the product solves their problem with sweat-drenched earbuds. Concept B, the one your tool undervalued, performs 60% better in the market.

Now let's replay this with Brainsuite's multimodal intelligence:

Brainsuite analyzes both concepts and identifies that Concept B's text ("Sweat-Proof. Rain-Proof. Unstoppable.") contains three specific benefit triggers that map to high-intent search patterns in your fitness demographic. It processes that the water droplets aren't just visual elements; they're proof signals that reinforce the claim. It recognizes that "Unstoppable" is an identity-alignment word that fitness enthusiasts respond to more strongly than "Rhythm."

More importantly, it predicts that for paid social (your primary channel), benefit-driven product shots outperform lifestyle shots in this category by 43% because fitness enthusiasts are problem-solvers, not dreamers. They scroll past inspiration. They stop for solutions.

You commit €500K to Concept B. You hit KPIs. You scale. Your Q4 numbers make you a hero instead of a cautionary tale.

That's the cost of approximation: not just underperformance, but opportunity cost. The right creative you didn't choose because your tool couldn't read it properly. The budget you wasted on approximation instead of investing in precision.

How many times can your brand afford to be wrong before the CFO starts asking why you're still using tools that guess instead of know?

Brainsuite's Multimodal Intelligence: What Precision Actually Looks Like


Here's what changes when your creative analysis tool can actually process meaning:

Semantic Text Understanding: Not just "there's a headline here" but "this headline triggers loss aversion" or "this CTA uses social proof language patterns."

Voiceover Content Analysis: What's being said, how it's being said, and how spoken messaging interacts with visual content to create (or destroy) coherence.

Scene-by-Scene Video Intelligence: Not static frame analysis, but understanding of narrative progression, emotional arcs, and attention retention across time.

Audio Emotional Cuing: How music, sound effects, and tonal shifts influence viewer emotional state and decision-making.

Context-Specific KPI Delivery: Different success metrics for TikTok vs. YouTube vs. billboards vs. packaging, because success means different things in different contexts.

MIT-Validated 98% Accuracy: Not a claim. Not marketing copy. Peer-reviewed scientific validation that the predictions you're relying on actually match real-world human attention behavior.

This isn't about feature lists. It's about whether the tool you're trusting with budget-defining decisions is sophisticated enough to analyze creative the way your customers actually experience it: holistically, semantically, emotionally, contextually.

Because marketing in 2025 isn't about who has the prettiest dashboard. It's about who makes the right call when €500K is on the line.

The Question You Should Be Asking

If your current attention prediction tool can't read what your ads say, can't understand what your voiceovers mean, and gives you the same KPIs for TikTok as it does for billboards, what decisions are you making based on incomplete information?

More importantly: What's that incompleteness costing you?

The answers aren't comfortable. But they're necessary. Because in a world where creative performance separates market leaders from market casualties, approximation isn't just inadequate. It's expensive.

Ready to see the difference? Run a side-by-side comparison. Take your current creative. Analyze it with your existing tool. Then analyze it with Brainsuite. See what you've been missing. See what semantic understanding reveals. See what 98% accuracy looks like when it's applied to your actual campaigns.

Request a comparison demo and discover what happens when your creative analysis tool can finally read.